This article was first written in 2024 and updated in 2025.

The Blue-Eyed Islander Puzzle

Math prodigy Terence Tao once wrote a blog post about the Blue-Eyed Islander conundrum. Puzzle lovers have enjoyed the challenge for the sheer magic of its math and logic. But look closer, and the real magic isn't just in the cleverness; it's in the revelation of a divine law: math favors truth.

Here is the puzzle. An island is home to a group of islanders who follow a strange religion: anyone who discovers their own eye color must commit suicide at midnight. The rules are:

- No one knows their own eye color, and no one can find it out from a reflection or by asking.

- Everyone can see everyone else’s eye color.

- All islanders are perfectly logical and capable of flawless deduction.

One day, an outsider visits the island and says: "I see at least one person who has the same blue eyes as I do." A chain reaction begins. Days later, all the islanders commit suicide.

Many explanations of the puzzle can be found online. Here is one. Give it a try.

The Math Behind a Narrative Collapse

Now let's see how the beauty of math can collapse a false narrative.

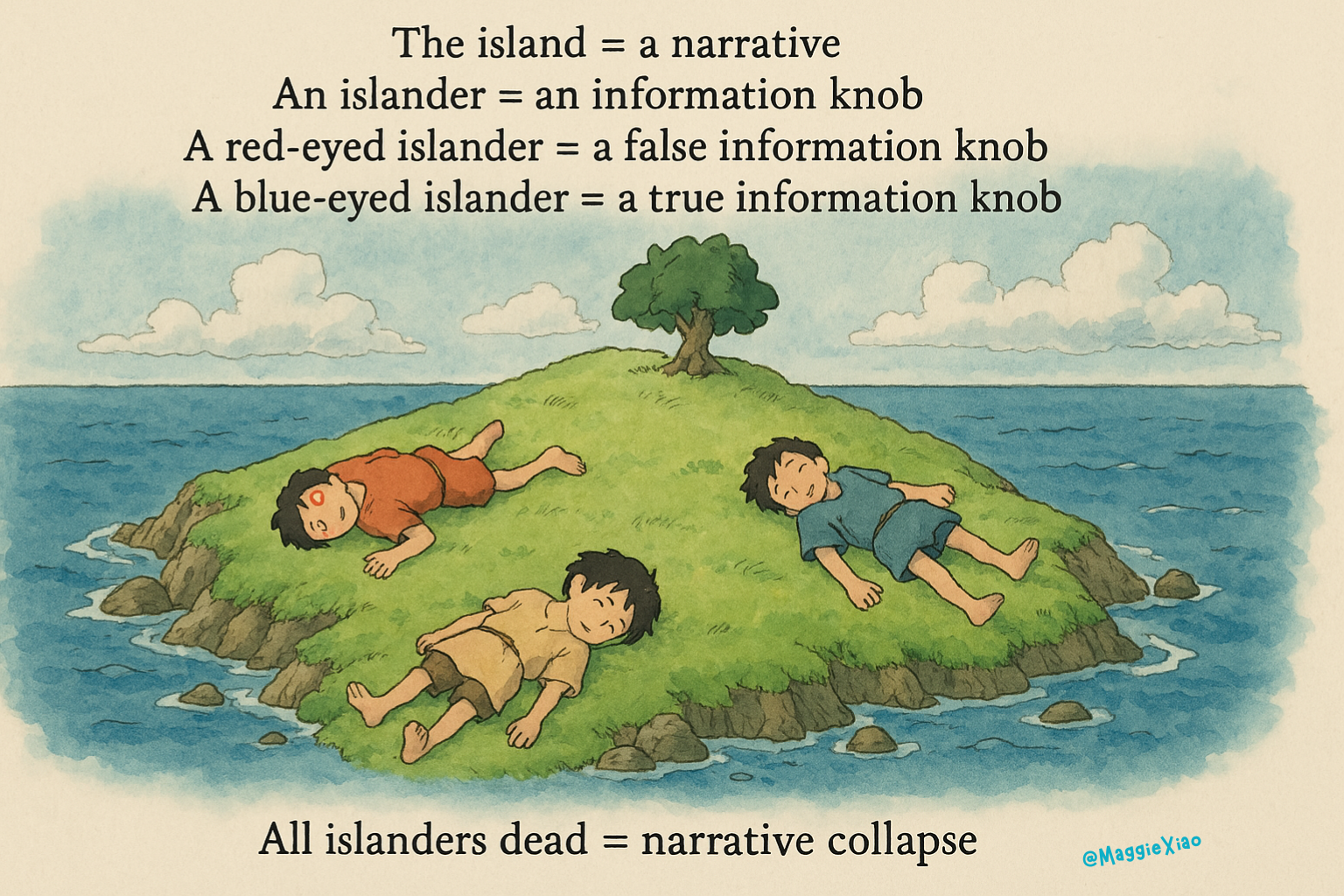

Let's think of the island as a closed narrative ecosystem and simply call it "a narrative." The islanders are information knobs: units of data or belief embedded in that narrative. These knobs can be either logical (true) or illogical (false). The moment when all the islanders commit suicide? That's the moment of narrative collapse.

So the mapping is:

- The island = a narrative

- An islander = an information knob

- A red-eyed islander = a false information knob

- A blue-eyed islander = a true information knob

- All islanders dead = narrative collapse

Now imagine this: there are 100 islanders. Only one has blue eyes, a single true information knob among 99 falsehoods. When the visitor arrives and casually says, "I see at least one with blue eyes," it sets off a logical chain reaction. Seeing no one else with blue eyes, the sole blue-eyed islander deduces that it must be him and commits suicide that very midnight. Result? All islanders commit suicide: narrative collapse in a single day.

If there are three blue-eyed islanders? It takes three days. The timeline of collapse increases with the number of truth-bearers, up to 99. (Why not 100? We'll return to that shortly.)
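The day count can be checked with a small simulation. The sketch below is an illustration, not Tao's method: it assumes red and blue are the only eye colors (and that everyone knows this), treats each possible assignment of colors as a "world," and after every silent midnight publicly discards the worlds in which someone would have deduced their color. Because it enumerates all 2^n worlds, it uses a five-person island rather than 100; `first_departure` and `flip` are hypothetical names.

```python
from itertools import product

# Assumption: red and blue are the only eye colors, and every islander knows it.
World = tuple[bool, ...]  # one possible color assignment; True = blue, False = red


def flip(world: World, i: int) -> World:
    """The world islander i cannot tell apart from `world`: identical except for i's own eyes."""
    return world[:i] + (not world[i],) + world[i + 1:]


def first_departure(actual: World) -> tuple[int, list[int]]:
    """Return (day, islanders) for the first midnight on which anyone deduces their own color."""
    assert any(actual), "the visitor's statement must be true"
    n = len(actual)
    # The public statement rules out the one world in which nobody has blue eyes.
    worlds = {w for w in product((False, True), repeat=n) if any(w)}

    def knowers(w: World) -> frozenset[int]:
        # Islander i knows their color in w once the only alternative, flip(w, i), is ruled out.
        return frozenset(i for i in range(n) if flip(w, i) not in worlds)

    for day in range(1, n + 1):
        deduced = knowers(actual)
        if deduced:
            return day, sorted(deduced)
        # A silent midnight is public: discard every world in which someone would have deduced.
        worlds = {w for w in worlds if not knowers(w)}
    raise AssertionError("unreachable: with k blue-eyed islanders, someone deduces by day k")


print(first_departure((True, False, False, False, False)))  # (1, [0])
print(first_departure((True, True, True, False, False)))    # (3, [0, 1, 2])
```

Nothing in the code tells the islanders how many blue-eyed people there are: the count emerges only from the nights that pass in silence. The function stops at the first deduction and does not model what the red-eyed islanders do afterward.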

Here’s the beauty: the islanders don’t need to know the exact number of blue-eyed individuals to collapse the narrative. All they need is the visitor’s statement: the injection of common knowledge. The math handles the rest.

This is the power of logic-based information systems: you don’t need 100% certainty to topple a lie. You only need one well-placed truth, and time.

And what if the narrative is already true? That means all 100 islanders have blue eyes. Then nothing happens. When the visitor says, “I see at least one with blue eyes,” no reaction follows, because each islander sees 99 others with blue eyes and reasons: That means I could be either blue-eyed or red-eyed. So everyone waits. The chain never starts. The logic stalls. The narrative stands. Truth holds.

What Are Mutual Knowledge and Common Knowledge? A Real-Life Case

This is where the puzzle's deeper moral reveals itself: the difference between mutual knowledge and common knowledge. Before the visitor speaks, each islander sees others with either red or blue eyes. As long as there are at least two blue-eyed islanders, every islander can see at least one, so everyone knows there are blue-eyed individuals on the island. That's mutual knowledge: everyone knows the fact, but that does not mean everyone knows that everyone else knows it, or that others know they know it.

But when the visitor publicly states, "I see at least one person with blue eyes," that observation becomes common knowledge. Now everyone not only knows it, but knows that everyone else knows it, knows that everyone knows that, and so on without end. This shift, subtle as it seems, is the spark that activates the logical chain reaction. Once the truth is common, it becomes actionable. That's when the countdown begins, and the narrative collapses.
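The gap between the two kinds of knowledge can be measured in the same possible-worlds style. This is an illustrative model under the assumption that red and blue are the only eye colors, with hypothetical helper names: it counts how many nested layers of "everyone knows that..." hold for the fact "someone has blue eyes," before and after the visitor speaks, on a five-person island with three blue-eyed islanders.

```python
import math
from itertools import product

# Assumption: red and blue are the only eye colors, and every islander knows it.
World = tuple[bool, ...]  # True = blue, False = red


def flip(world: World, i: int) -> World:
    # The only other world islander i considers possible: identical except for i's own eyes.
    return world[:i] + (not world[i],) + world[i + 1:]


def everyone_knows(fact: set[World], worlds: set[World], n: int) -> set[World]:
    # Worlds where the fact holds and no islander can imagine a still-possible world where it fails.
    return {
        w for w in fact
        if all(flip(w, i) not in worlds or flip(w, i) in fact for i in range(n))
    }


def knowledge_depth(actual: World, worlds: set[World]) -> float:
    """Count nested layers of "everyone knows that..." for "someone has blue eyes".

    Returns math.inf when the layers never run out, i.e. the fact is common knowledge.
    """
    n = len(actual)
    level = {w for w in worlds if any(w)}  # worlds where the fact itself is true
    depth = 0
    while True:
        deeper = everyone_knows(level, worlds, n)
        if deeper == level:
            return math.inf  # fixed point: every further layer holds as well
        if actual not in deeper:
            return depth
        depth += 1
        level = deeper


all_worlds = set(product((False, True), repeat=5))
actual = (True, True, True, False, False)  # three blue-eyed islanders out of five
print(knowledge_depth(actual, all_worlds))                         # before the visitor: 2
print(knowledge_depth(actual, {w for w in all_worlds if any(w)}))  # after the visitor: inf
```

Before the announcement the count stops at 2: everyone knows, and everyone knows that everyone knows, but the third layer fails, because each extra layer lets one more blue-eyed islander imagine that their own eyes are red. After the announcement the layers never run out, which is what common knowledge means.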

Marc Andreessen once described Timur Kuran's Private Truths, Public Lies as "the book we are living through": people lie in public about what they believe until one voice tells the truth and others realize they're not alone.

We’ve seen this dynamic in the real world.

Before October 16, 2015, it was an open secret within tight-knit Silicon Valley circles that Theranos wasn't living up to its claims. But this remained mutual knowledge among venture capitalists, biotech scientists, and engineers: widely suspected, rarely stated. As a result, major venture capital firms avoided leading Theranos's Series A round, while investors outside the small circle on Sand Hill Road, and the public, remained in the dark.

Then came the Wall Street Journal's 2015 exposé. It didn't just confirm the whispers. It converted mutual knowledge into common knowledge. And with that, Theranos's myth unraveled and its narrative collapsed almost overnight.

In both logic puzzles and real-life information ecosystems, truth doesn’t spread because it is known. It spreads when it becomes known to be known.

How the Logic of the "Blue-Eyed Puzzle" Has Changed, and Will Forever Change, Today's Media

Marshall McLuhan, the visionary who famously declared "the medium is the message," foresaw a world where electronic technology would weave humanity into a global village. He did not live to see what came next: that village would soon receive a visitor called the Internet, whose arrival would shake the delicate balance between what individuals know and what they collectively acknowledge. In this new global village, the line between mutual knowledge and common knowledge is on the verge of collapse.

The shift began quietly, and then all at once.

It first showed in the rise of Joe Rogan: a podcaster alone with his audience, both once ridiculed as cave dwellers. By 2024, statistics showed The Joe Rogan Experience's viewership surpassing that of several major networks. As Bret Weinstein put it,

we once lived in a three broadcast network world... (where) Goliath wasn't watching the man cave. And that's where it started to unravel.

Joe Rogan and his fellow podcasters were the early tourist truth-breakers of a collapsing information order: unexpected, uninvited, and unstoppable.

But it didn't stop with Rogan. The tourist truth-breakers kept coming, in batches, by the boatload, in the form of tweets. Suddenly, mutual knowledge and common knowledge were just one tweet apart! The collapse was no longer a question of if, but when.

Then came the Berlin Wall moment of the mobile internet.

In 2025, amid rumors of a TikTok shutdown, self-described "TikTok refugees" spilled into RedNote, a Chinese social media platform previously invisible to most English-speaking users. Overnight, Chinese and Western netizens, long separated by firewalls and propaganda, found themselves in open dialogue. They compared healthcare systems, living costs, and family recipes. They discovered how differently the world had been portrayed to them by their respective mainstream media. It wasn't an exposé. It wasn't a campaign. It was something simpler, and more profound: a spontaneous, unfiltered collision of truths.

The illusion broke. The knowledge became common.

Naval Ravikant captured this perfectly:

The Internet commoditized the distribution of facts. The "news" media responded by pivoting wholesale into opinions and entertainment.

— Naval (@naval) May 26, 2016

From walled garden to open commons, information flow is now democratized, searchable, shareable, and verifiable (think Twitter/X Community Notes), everywhere, all at once.

Fast forward to the 2020s, and for the first time we find ourselves not just reading the Internet, but conversing with it. Enter the Large Language Model (LLM): a giant step for tech and humanity, and also a "wait, who's in charge now?" moment for journalism.

On April 2, 2025, President Trump announced his world-shaking "Liberation Day" tariffs, sending markets into chaos as everyone from cable news anchors to hedge fund analysts scrambled to become instant tariff experts. Then an X account called Ask Perplexity posted:

Just finished listening to Trump's Liberation Day speech and reading 5,000 pages on global tariff policy 📘

— Ask Perplexity (@AskPerplexity) April 2, 2025

Ask your questions about Trump's 'Liberation Day' tariffs below: pic.twitter.com/LHNqtna6Mg

Users did, and Ask Perplexity replied. Quickly. Clearly. No opinion, no spin. Just facts, retrieved and reasoned through at scale, explained in a way even a teenager could understand, and frankly, better than most so-called experts.

Except Ask Perplexity is a bot.

Iteration, Induction, and Recursion

Ask Perplexity didn’t guess. It didn’t editorialize. It iterated through the data, mapped relevance, and surfaced clarity. In doing so, it revealed a new structure of epistemology, one where truth is not handed down, but emerges through process.

An economic turning point had arrived. Ask Perplexity is a new kind of blue-eyed outlander, one that didn't even know it was telling the truth. Just like the logic puzzle!

Machines excel at iteration, crushing countless logical steps into milliseconds without fatigue. Humans, on the other hand, thrive on induction, leaping from fragments to patterns, spotting meaning in chaos like mathematicians sensing elegance in abstraction.

We saw this tension clearly in the Blue-Eyed Islander puzzle. As the number of blue-eyed islanders increases, the logical steps required to reach the conclusion multiply. For humans, the mental burden of tracking each step becomes overwhelming. We’re great at grasping the pattern, but we falter when forced to walk through every step to prove it. Iteration is where human cognition slows.

Machines, by contrast, iterate tirelessly, as the Ask Perplexity bot did, allowing logic to converge and truth to scale. But trusting the machine alone is dangerous. We know it hallucinates. We know the algorithms can be tweaked, by humans, for censorship, control, or ideology.

Then there is recursion, a process that refers back to itself, producing layered, self-correcting truths. Recursion is how systems learn from their own outputs. It is the moment when iterations and inductions loop back, tighten, and converge into insight.
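The inductive argument behind the puzzle is itself a recursion: an islander's reasoning calls the same reasoning on an island with one fewer blue-eyed person. A minimal sketch, assuming two eye colors and using a hypothetical function name `departure_day`, computes the night on which a blue-eyed islander leaves from nothing but the number of blue-eyed islanders they can see.

```python
# Assumption: red and blue are the only eye colors, and the visitor's statement is common knowledge.
def departure_day(blue_seen: int) -> int:
    """Midnight on which a blue-eyed islander who sees `blue_seen` blue-eyed others deduces their color."""
    if blue_seen == 0:
        # Base case: someone has blue eyes and I see no one who does, so it must be me.
        return 1
    # Recursive case: if my eyes were red, each blue-eyed islander I see would see
    # blue_seen - 1 blue-eyed others and, reasoning exactly as I do, leave on this night...
    if_i_were_red = departure_day(blue_seen - 1)
    # ...so when that night passes in silence, my eyes must be blue and I leave the next one.
    return if_i_were_red + 1


print(departure_day(2))  # three blue-eyed islanders, each seeing two others: 3
```

The recursion states the pattern once (the human part); the call stack walks every step (the machine part).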

With machines handling the iteration and the Internet as the algorithmic carrier, humans are freer than ever to do what we do best: see the pattern. Together, human induction and machine iteration can reach truth faster through a kind of distributed recursion.

As American computer scientist L. Peter Deutsch once playfully put it:

To iterate is human, to recurse divine.

And now, in this alliance of silicon and synapse, we touch the divine.

That’s the blue-eyed tourist logic in action. LLM-powered tools only amplify this law. Once the truth slips out, even by accident, there’s no going back.

And so, the wall falls. Not with a bang. Not with a press release. But with a podcast. A question. A tweet. Now, a prompt.

Again, Naval puts it best:

Truth is that which, once you see it, you can’t unsee.